Great to have you back - welcome to the final episode of our mini-series! To recap, we discussed the basics of confidential computing in the first post and the basics of service meshes in the second post. Now it's time to put the two together.

We already established that there are two main problems when it comes to using normal service meshes for confidential computing:

> 1\. Encrypted service-to-service communication needs to terminate inside secure enclaves instead of separate sidecars. Otherwise an attacker could just tap the service-to-sidecar communication, manipulate the sidecar, etc.

>

> 2\. A crucial aspect of confidential computing is verifiability. Someone needs to make sure that each service in the cluster is actually running inside secure enclaves and that it was initialized with the right parameters and code.

Ok, the first one is easy. To fully understand the challenges with the second, let's examine the typical steps when running a simple confidential computing app:

1. Someone loads the app into an enclave on an SGX-enabled machine.

2. The users verify the identity and integrity of the enclaved app using SGX's remote attestation protocol, establish a secure channel, and transfer their data.

3. The data is processed securely inside the enclave and the result is returned over the secure channel.
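The three steps above can be sketched in a few lines of Go. This is only a simulation under loud assumptions: the `Quote` type and the `Measure`, `Attest`, and `Verify` functions are hypothetical names, and a SHA-256 hash stands in for the enclave measurement. A real SGX quote is signed by the hardware and checked through the remote attestation protocol, which this sketch does not attempt to reproduce.

```go
package main

import (
	"crypto/sha256"
	"errors"
	"fmt"
)

// Quote is a simplified, hypothetical stand-in for an SGX attestation quote.
// A real quote is signed by the CPU; here we only model its two key fields.
type Quote struct {
	Measurement [32]byte // hash of the code loaded into the enclave
	ReportData  [32]byte // hash of the enclave's channel public key
}

// Measure computes the (simulated) measurement of an app binary.
func Measure(code []byte) [32]byte { return sha256.Sum256(code) }

// Attest models step 1: the app is loaded and the enclave produces a quote
// that binds its code identity to the key it will use for the secure channel.
func Attest(code, channelKey []byte) Quote {
	return Quote{Measurement: Measure(code), ReportData: sha256.Sum256(channelKey)}
}

// Verify models step 2 from the user's side: check that the expected code is
// running and that the channel key really belongs to that enclave.
func Verify(q Quote, expected [32]byte, channelKey []byte) error {
	if q.Measurement != expected {
		return errors.New("unexpected enclave measurement")
	}
	if q.ReportData != sha256.Sum256(channelKey) {
		return errors.New("channel key is not bound to the quote")
	}
	return nil // safe to establish the channel and send data (step 3)
}

func main() {
	code := []byte("app logic v1")
	key := []byte("enclave channel public key")

	fmt.Println("genuine enclave:", Verify(Attest(code, key), Measure(code), key))
	fmt.Println("tampered code:  ", Verify(Attest([]byte("evil"), key), Measure(code), key))
}
```

Binding the channel key into the quote is what lets the user trust the secure channel and not just the code identity.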

Now let's assume we want to run this application in the cloud using a microservice architecture. Let's assume that we have three services: the web UI, the data storage, and the actual app logic.

Consequently, we now have to deal with at least three enclaves. Once we scale the app to meet the inevitable explosive growth in demand, there will be many more enclaves. (After all, one of the key benefits of a microservice architecture is often scalability.)

While functionally nothing has changed for the users, things have changed fundamentally from a security and verification perspective: they can still verify the web UI node they are talking to in step (2), but they don't know what is going on in the cluster beyond that. What other services are there that can access their data? Are all services running in enclaves? Etc.

A bad solution would be to expose all enclaves in the cluster to the users and have the users verify every single enclave and make sense of it.

Another solution would be to embed the verification part into the services themselves and let them verify each other and establish secure and trusted channels based on that. The users would possibly only need to verify a single enclave to establish trust in the whole distributed app.

This is already pretty good. But now each app would need to implement the service-to-service authentication alongside its actual business logic. So let's try to move all of that into some standard infrastructure component... wait!

If you read our previous post carefully, this will seem familiar to you. It is the service mesh idea all over again!

This is why we need a service mesh for confidential computing :-)

So without further ado... here are the (hopefully now unsurprising) core concepts of MarbleRun:

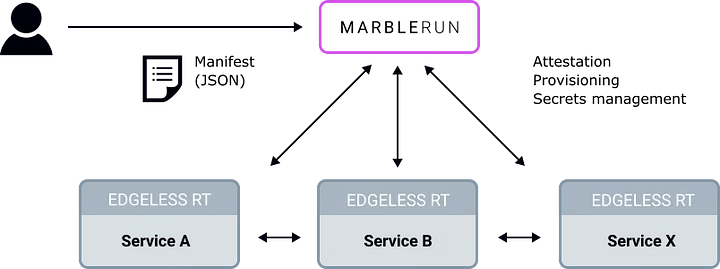

Instead of relying on separate sidecars, MarbleRun fuses the data-plane logic with the application logic running inside secure enclaves. Through this tight coupling, secure connections always terminate inside secure enclaves. We like to refer to containers running such enclaves as Marbles. (And we hope you like it too :-)

Before bootstrapping Marbles, MarbleRun verifies their integrity using Intel SGX remote attestation primitives. This way, MarbleRun is able to guarantee that the topology of the app adheres to the cluster's effective Manifest. Such a Manifest is defined in simple JSON and is set once via a RESTful API by the provider of the app. The Manifest defines allowed code packages, parameters, and more.
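To make the Manifest idea more concrete, here is a minimal sketch of how a JSON document listing allowed code packages and parameters could be parsed and used to admit or reject a service. The field names (`Packages`, `Marbles`, `Measurement`, `Env`) and the `Admits` helper are assumptions chosen for illustration, not necessarily MarbleRun's actual schema or logic; the documentation has the real format.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Hypothetical manifest layout, for illustration only.
type Package struct {
	Measurement string // expected enclave measurement (hex), assumed field
}

type Marble struct {
	Package string            // name of the package this service must run
	Env     map[string]string // parameters the service is started with
}

type Manifest struct {
	Packages map[string]Package
	Marbles  map[string]Marble
}

const exampleManifest = `{
  "Packages": {
    "backend": {"Measurement": "aabbcc"}
  },
  "Marbles": {
    "app-logic": {"Package": "backend", "Env": {"MODE": "production"}}
  }
}`

// ParseManifest decodes the JSON the app provider would upload once.
func ParseManifest(data []byte) (Manifest, error) {
	var m Manifest
	err := json.Unmarshal(data, &m)
	return m, err
}

// Admits reports whether a service claiming to be `marble` and attesting to
// `measurement` matches what the manifest allows.
func (m Manifest) Admits(marble, measurement string) bool {
	mb, ok := m.Marbles[marble]
	if !ok {
		return false
	}
	pkg, ok := m.Packages[mb.Package]
	return ok && pkg.Measurement == measurement
}

func main() {
	m, err := ParseManifest([]byte(exampleManifest))
	if err != nil {
		panic(err)
	}
	fmt.Println("known code:  ", m.Admits("app-logic", "aabbcc"))
	fmt.Println("unknown code:", m.Admits("app-logic", "ddeeff"))
}
```

The point of the sketch is the direction of control: the provider states once what is allowed, and every service is checked against that statement before it joins the cluster.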

MarbleRun acts as a certificate authority (CA) for all Marbles and issues one concise remote attestation statement for the entire cluster. This can be used by anyone to verify the integrity of the distributed app. In essence, users can make sure that a certain Manifest is in place and everything is running on secure enclaves.
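The CA role can be illustrated with Go's standard `crypto/x509` package. The sketch below is a generic example of a small in-cluster CA issuing a certificate to a service, not MarbleRun's actual implementation: the function names `NewCA`, `IssueLeaf`, and `VerifyLeaf`, the service name `web-ui`, and the validity periods are all assumptions. It also leaves out the attestation check that would come before issuing anything.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"fmt"
	"math/big"
	"time"
)

// NewCA creates a self-signed root certificate and its private key.
func NewCA() (*x509.Certificate, *ecdsa.PrivateKey, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber:          big.NewInt(1),
		Subject:               pkix.Name{CommonName: "example mesh CA"},
		NotBefore:             time.Now().Add(-time.Minute),
		NotAfter:              time.Now().Add(24 * time.Hour),
		IsCA:                  true,
		BasicConstraintsValid: true,
		KeyUsage:              x509.KeyUsageCertSign,
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	return cert, key, err
}

// IssueLeaf signs a certificate for one service. In a confidential setting
// this would only happen after the service's enclave has been attested.
func IssueLeaf(ca *x509.Certificate, caKey *ecdsa.PrivateKey, name string) (*x509.Certificate, *ecdsa.PrivateKey, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(2),
		Subject:      pkix.Name{CommonName: name},
		DNSNames:     []string{name},
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(time.Hour),
		KeyUsage:     x509.KeyUsageDigitalSignature,
		ExtKeyUsage:  []x509.ExtKeyUsage{x509.ExtKeyUsageServerAuth, x509.ExtKeyUsageClientAuth},
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, ca, &key.PublicKey, caKey)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	return cert, key, err
}

// VerifyLeaf checks that a service certificate chains up to the given CA.
func VerifyLeaf(ca, leaf *x509.Certificate, name string) error {
	roots := x509.NewCertPool()
	roots.AddCert(ca)
	_, err := leaf.Verify(x509.VerifyOptions{Roots: roots, DNSName: name})
	return err
}

func main() {
	ca, caKey, err := NewCA()
	if err != nil {
		panic(err)
	}
	leaf, _, err := IssueLeaf(ca, caKey, "web-ui")
	if err != nil {
		panic(err)
	}
	fmt.Println("verification error:", VerifyLeaf(ca, leaf, "web-ui"))
}
```

Because every service certificate chains up to one root, a user who has verified that root only needs to check a single trust anchor instead of every enclave.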

Finally (and this is something that we haven't touched on so far), MarbleRun manages different types of secrets for Marbles. This is particularly important in cloud environments, where SGX secrets are normally tied to specific CPUs. MarbleRun issues "virtual sealing keys" that still work when a Marble is rescheduled on a new host. MarbleRun also makes it easy to establish shared secrets between Marbles.

There is of course more, like recovery features. Check out the documentation for details.

MarbleRun's control plane is written in 100% Go and (of course) runs inside a secure enclave itself. It is deployed as a regular container in your cluster, for example using our provided Helm charts.
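One way to picture a sealing key that is not tied to a CPU is to derive it from a cluster-wide secret plus the service's identity, so that any host holding the cluster secret can recompute it. The sketch below does this with HMAC-SHA256 and AES-GCM from Go's standard library. It is an assumption-laden illustration, not MarbleRun's actual key-derivation scheme: `DeriveSealKey`, `Seal`, `Unseal`, and the label string are hypothetical.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/hmac"
	"crypto/rand"
	"crypto/sha256"
	"errors"
	"fmt"
)

// DeriveSealKey derives a 32-byte key from a cluster secret and the service
// identity. It depends on no CPU-specific input, so it is host-independent.
func DeriveSealKey(clusterSecret []byte, packageID, marbleName string) []byte {
	mac := hmac.New(sha256.New, clusterSecret)
	mac.Write([]byte("seal-key\x00" + packageID + "\x00" + marbleName))
	return mac.Sum(nil)
}

// Seal encrypts data with AES-256-GCM and prepends the random nonce.
func Seal(key, plaintext []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	return gcm.Seal(nonce, nonce, plaintext, nil), nil
}

// Unseal reverses Seal and fails if the data was modified or the key differs.
func Unseal(key, sealed []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	if len(sealed) < gcm.NonceSize() {
		return nil, errors.New("sealed data too short")
	}
	nonce, ciphertext := sealed[:gcm.NonceSize()], sealed[gcm.NonceSize():]
	return gcm.Open(nil, nonce, ciphertext, nil)
}

func main() {
	clusterSecret := []byte("held only by the attested control plane")

	// Host A: the service seals its state.
	keyA := DeriveSealKey(clusterSecret, "backend", "data-storage")
	sealed, err := Seal(keyA, []byte("persistent state"))
	if err != nil {
		panic(err)
	}

	// Host B: after rescheduling, the same key is derived again.
	keyB := DeriveSealKey(clusterSecret, "backend", "data-storage")
	plain, err := Unseal(keyB, sealed)
	fmt.Println(string(plain), err)
}
```

In this sketch, a different service name or package yields a different key, so one service cannot unseal another's data unless the derivation inputs are deliberately shared.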

For now, Marbles can be developed in Go, Rust, or C++17 using our open-source Edgeless RT framework. Go is the primary language we use for developing enclave code at Edgeless Systems. We believe that Go is the best language for most cloud-native confidential apps. There are a couple of reasons for this and we'll discuss them in detail in one of our next blog posts. Existing Go code can be compiled to a Marble with little or no code changes required.

In the near future, we plan to add support for Graphene-based and possibly SGX-LKL-based Marbles. This will make it possible to "marbleize" complex existing services. Think TensorFlow or NGINX.

Final question: is MarbleRun replacing my normal service mesh?
--------------------------------------------------------------

Short answer: not at all.

In fact, we like to use MarbleRun in conjunction with the Linkerd service mesh. By design, MarbleRun only concerns itself with the confidential-computing-related aspects of your cluster and is transparent to Kubernetes and normal service meshes. From their perspective, MarbleRun's control plane is just another service. The only thing that changes is that regular sidecars cannot peek inside traffic any more. However, they are already used to that and can cope ;-) Load balancing, monitoring, etc. still work.

Next steps
----------

Alright, that's it. By now, we hope that you are as excited as we are that there finally is a service mesh for confidential computing! If you'd like to take MarbleRun for a spin, we recommend you follow the quickstart guide and deploy a fun, scalable, and super-secure web app called "emoji.voto" on SGX-enabled Kubernetes. Fun fact: emoji.voto is also the default demo of Linkerd.

Happy emoji voting (beware of the 🍩)!

PS: everything we discussed is free and open-source. Looking forward to your issues, PRs, and stars.